More Control for Free!

Image Synthesis with Semantic Diffusion Guidance

Arman Chopikyan2, Yuxiao Hu2, Humphrey Shi2,3, Anna Rohrbach1, Trevor Darrell1.

Paper | Slides | Github

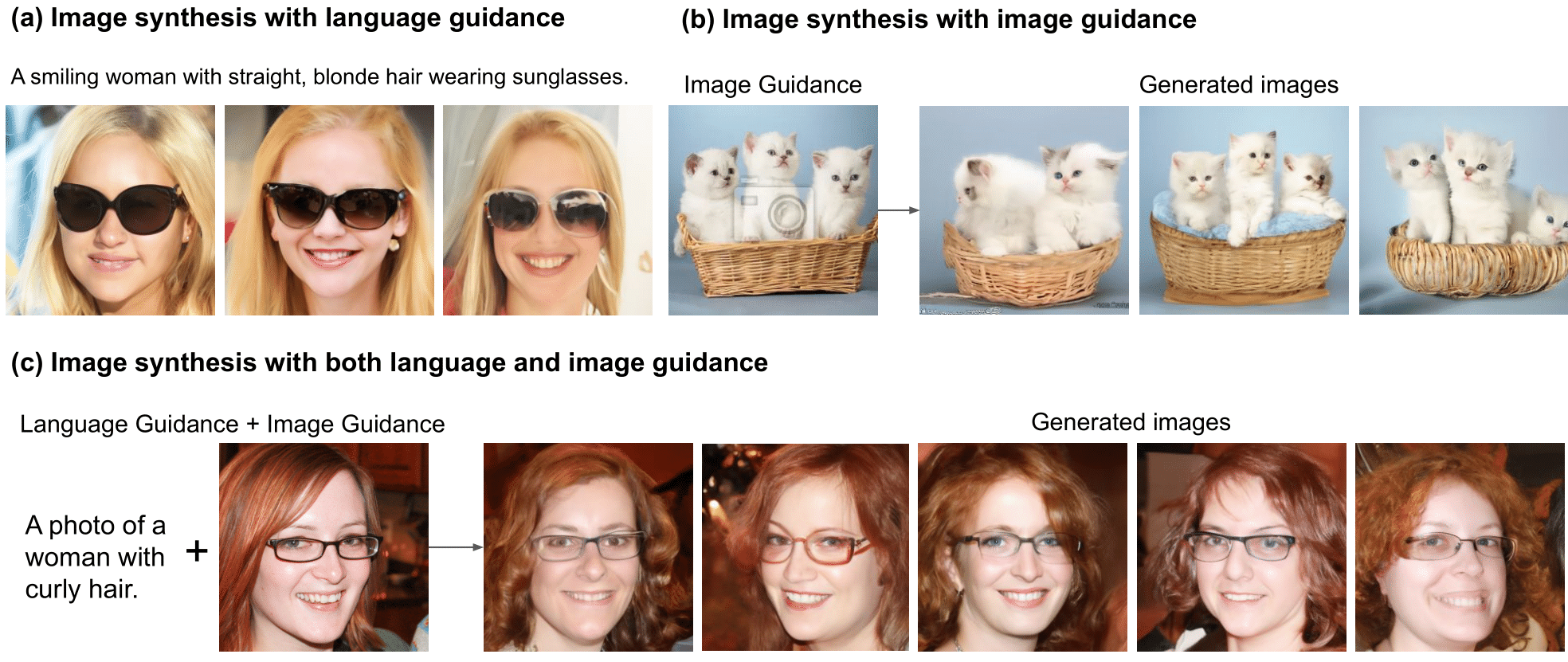

We propose a unified framework for fine-grained controllable image synthesis with language guidance, image guidance, or both. Our semantic guidance can be injected into a pretrained unconditional diffusion model without re-training or fine-tuning it. The language guidance can be applied to datasets that have no text annotations.

Abstract

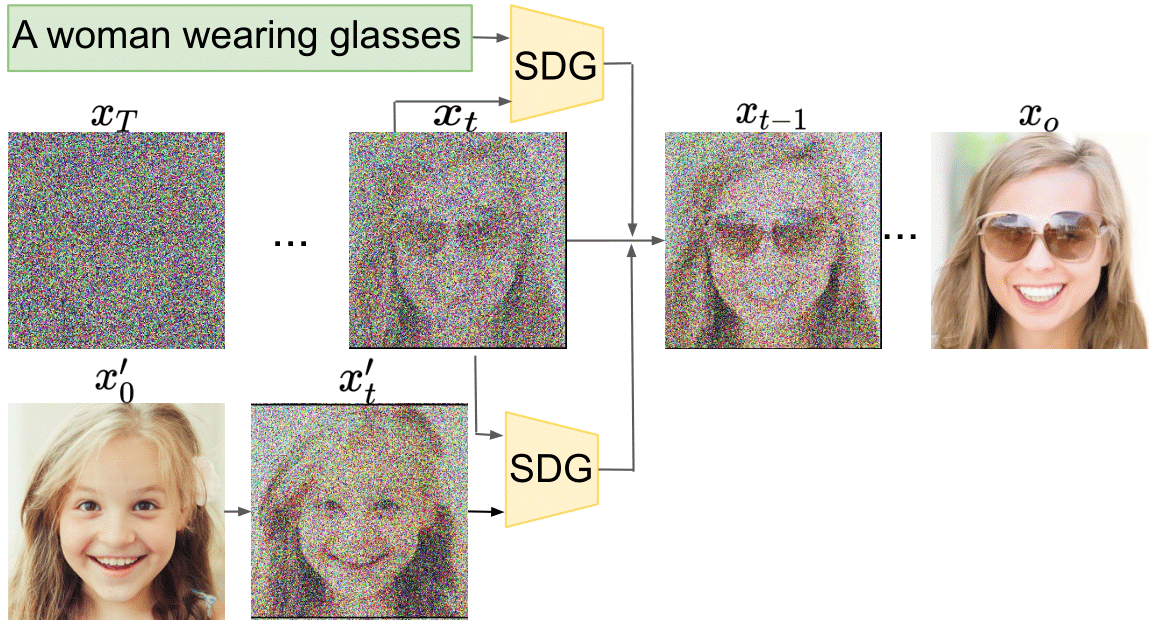

Controllable image synthesis models allow creation of diverse images based on text instructions or guidance from an example image. Recently, denoising diffusion probabilistic models have been shown to generate more realistic imagery than prior methods, and have been successfully demonstrated in unconditional and class-conditional settings. We explore fine-grained, continuous control of this model class, and introduce a novel unified framework for semantic diffusion guidance, which allows either language or image guidance, or both. Guidance is injected into a pretrained unconditional diffusion model using the gradient of image-text or image matching scores. We explore CLIP-based textual guidance as well as both content and style-based image guidance in a unified form. Our text-guided synthesis approach can be applied to datasets without associated text annotations. We conduct experiments on FFHQ and LSUN datasets, and show results on fine-grained text-guided image synthesis, synthesis of images related to a style or content example image, and examples with both textual and image guidance.

Semantic Diffusion Guidance

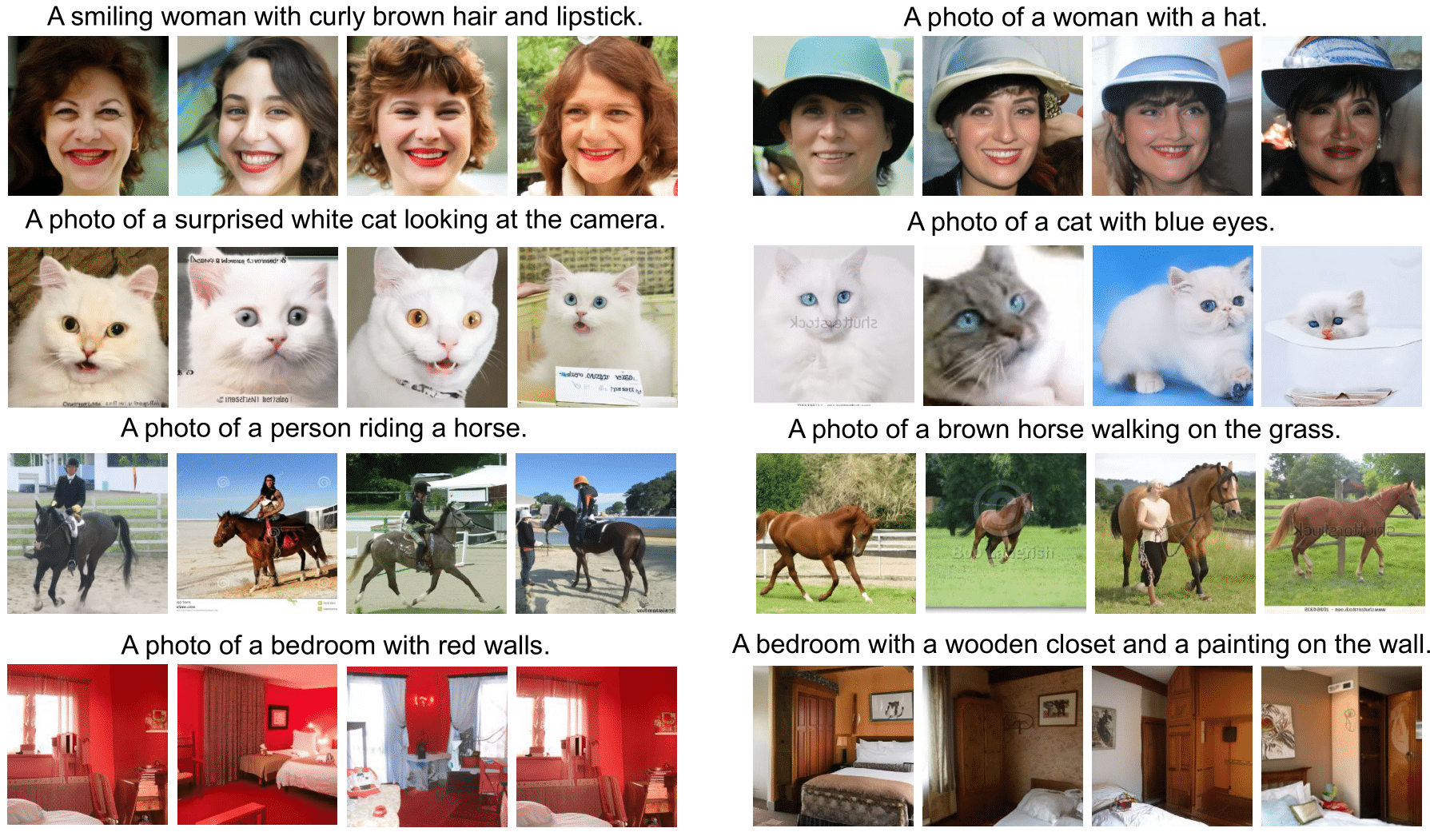

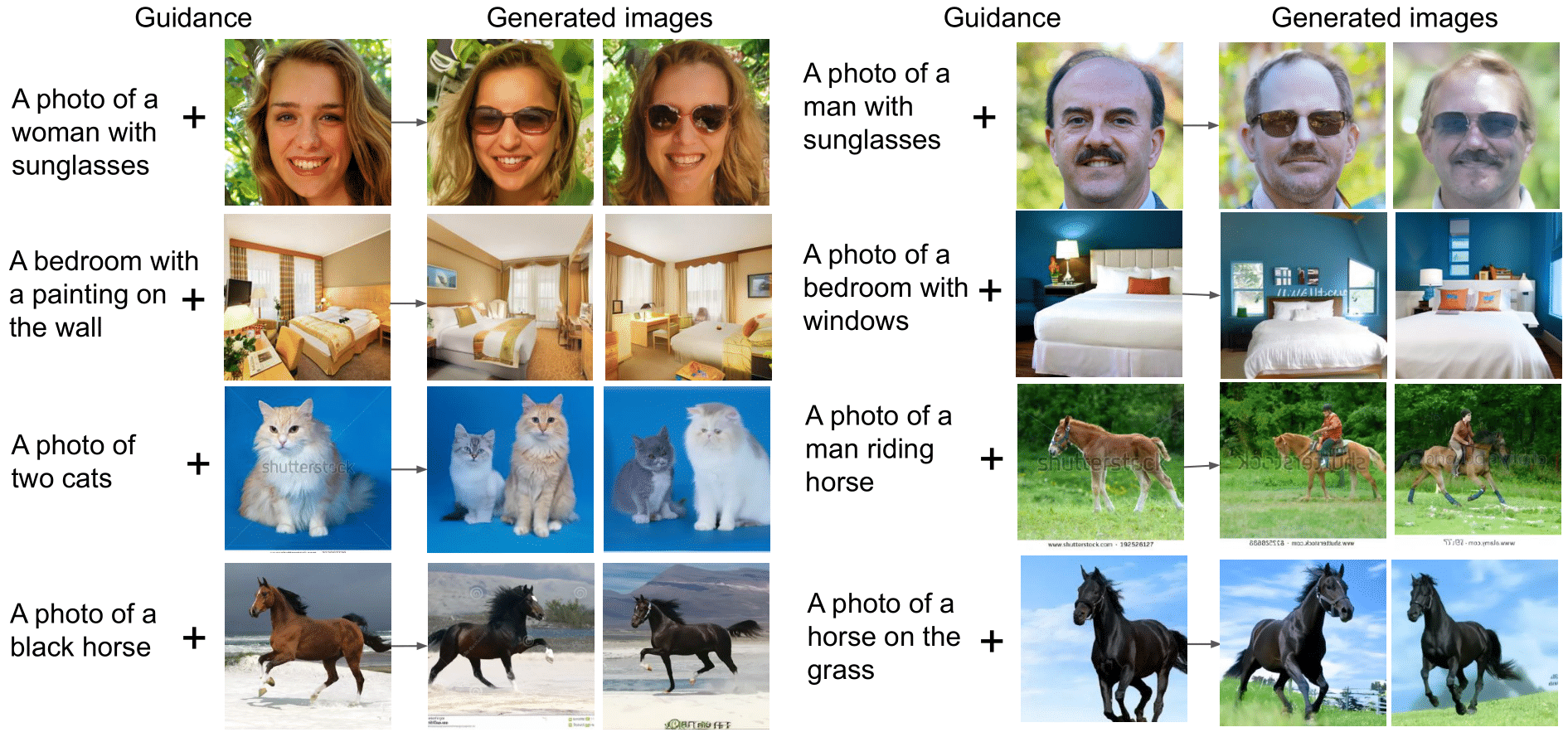
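The abstract states that guidance is injected into a pretrained unconditional diffusion model using the gradient of an image-text or image matching score. The following is a minimal sketch of one such guided sampling step, not the authors' implementation. It assumes the mean-shift form of classifier guidance from Dhariwal and Nichol (sample from a Gaussian whose mean is moved by scale × variance × gradient of the score). The names `cosine_score`, `guided_mean`, and `guided_step` are hypothetical, and a toy cosine similarity on raw vectors stands in for a learned matching score such as CLIP.

```python
import math
import random


def cosine_score(x, target):
    """Toy matching score: cosine similarity between two flat vectors.

    Assumption: stands in for an image-text or image-image matching score.
    A real system would compare learned embeddings, not raw vectors.
    """
    dot = sum(a * b for a, b in zip(x, target))
    nx = math.sqrt(sum(a * a for a in x))
    nt = math.sqrt(sum(b * b for b in target))
    return dot / (nx * nt)


def cosine_score_grad(x, target):
    """Analytic gradient of cosine_score with respect to x."""
    nx = math.sqrt(sum(a * a for a in x))
    nt = math.sqrt(sum(b * b for b in target))
    s = cosine_score(x, target)
    return [b / (nx * nt) - s * a / (nx * nx) for a, b in zip(x, target)]


def guided_mean(x_t, mean, variance, target, scale):
    """Shift the model's predicted mean along the score gradient at x_t.

    mean and variance are what a (hypothetical) unconditional diffusion
    model would predict for this step; variance is diagonal (per element).
    """
    grad = cosine_score_grad(x_t, target)
    return [m + scale * v * g for m, v, g in zip(mean, variance, grad)]


def guided_step(x_t, mean, variance, target, scale, rng):
    """Draw x_{t-1} from a Gaussian centred on the guided mean."""
    shifted = guided_mean(x_t, mean, variance, target, scale)
    return [m + math.sqrt(v) * rng.gauss(0.0, 1.0)
            for m, v in zip(shifted, variance)]


if __name__ == "__main__":
    rng = random.Random(0)
    x_t = [1.0, 0.0]        # current noisy sample (toy, 2-D)
    target = [0.0, 1.0]     # toy "guidance" vector
    variance = [0.1, 0.1]   # toy per-element variance
    # Toy stand-in for the model: predicted mean equals the current sample.
    shifted = guided_mean(x_t, x_t, variance, target, scale=1.0)
    print("score before:", cosine_score(x_t, target))
    print("score of guided mean:", cosine_score(shifted, target))
    print("sampled x_{t-1}:", guided_step(x_t, x_t, variance, target, 1.0, rng))
```

Setting `scale` to zero recovers the unguided step, which is why this style of guidance needs no re-training of the diffusion model: only the sampling mean changes.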

Results A: Image Synthesis with Language Guidance

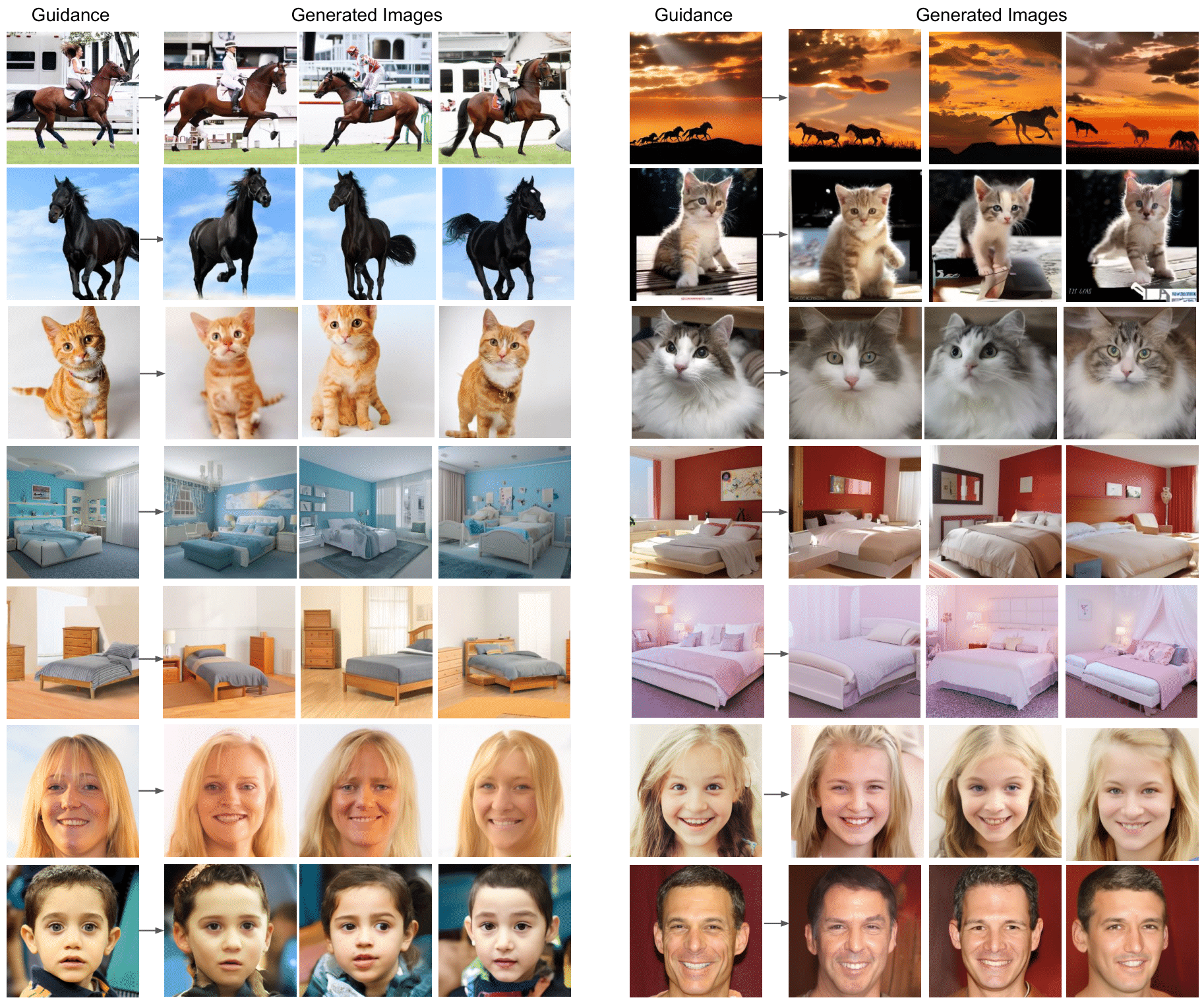

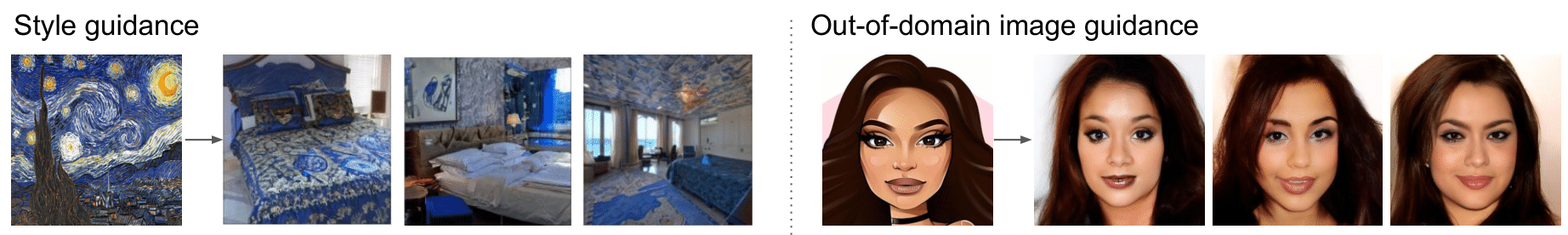

Results B: Image Synthesis with Image Guidance

Our image guidance can also take the form of a style image or an out-of-domain image, as shown below.

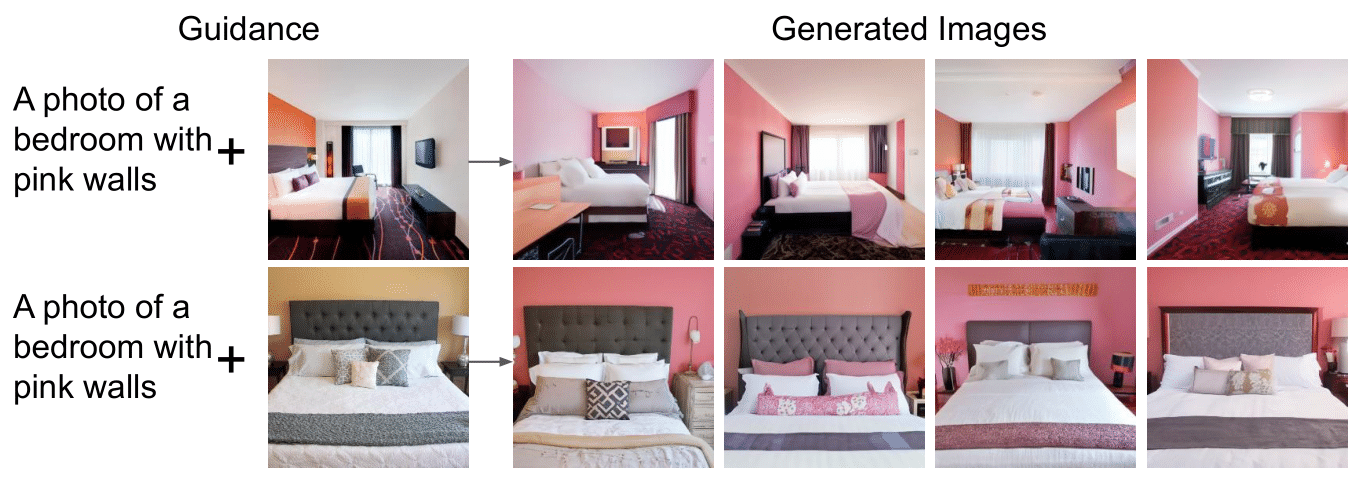

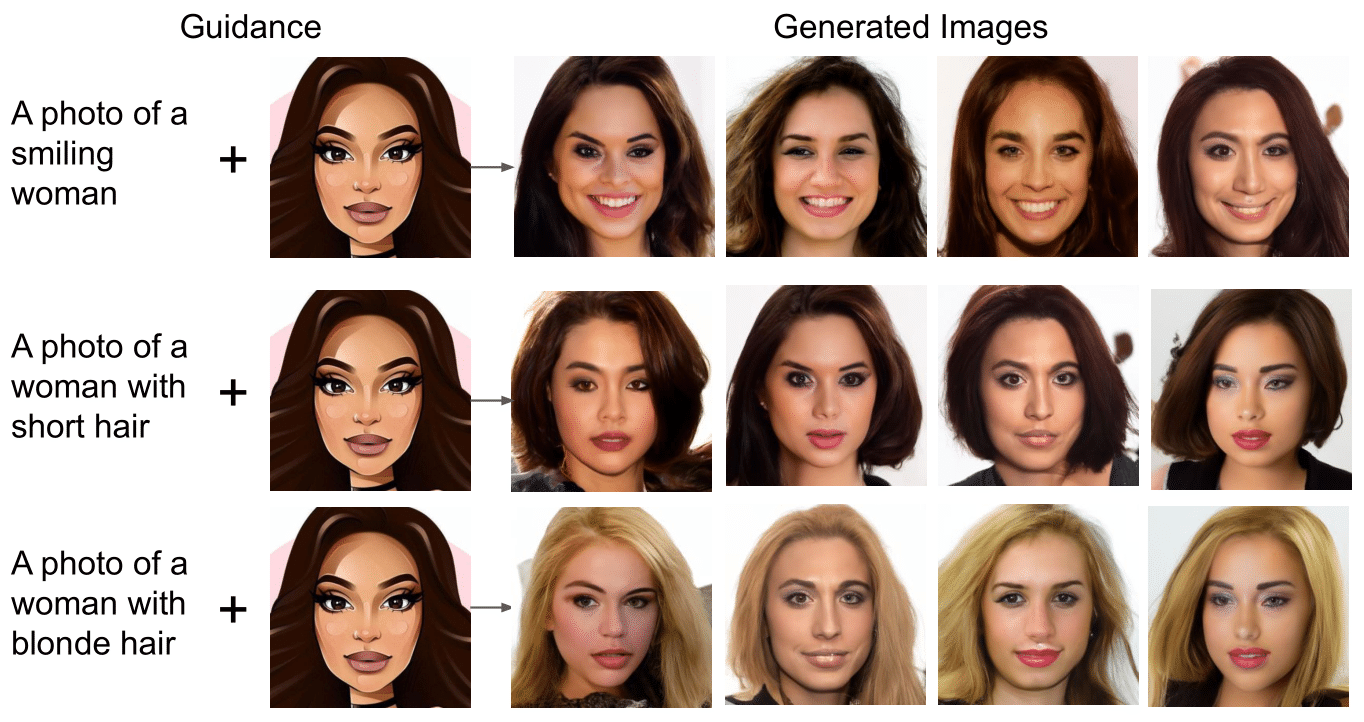

Results C: Image Synthesis with Language Guidance + Image Guidance

Examples with the same language guidance and different image guidance.

Examples with different language guidance and the same image guidance.
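One simple way to combine the two guidance signals, written here as an assumption rather than a statement of the exact formulation used for these results, is a weighted sum of the two matching scores. The symbols $F_{\text{lang}}$, $F_{\text{img}}$, $s_1$, and $s_2$ are illustrative: they denote the language and image matching scores evaluated on the noisy sample $x_t$ at step $t$, and their respective guidance scales.

$$
F(x_t, y_{\text{lang}}, y_{\text{img}}, t) = s_1\, F_{\text{lang}}(x_t, y_{\text{lang}}, t) + s_2\, F_{\text{img}}(x_t, y_{\text{img}}, t)
$$

Because the gradient is linear, $\nabla_{x_t} F = s_1 \nabla_{x_t} F_{\text{lang}} + s_2 \nabla_{x_t} F_{\text{img}}$, so under this assumption each modality contributes its own shift to the sampling mean, and setting $s_1 = 0$ or $s_2 = 0$ recovers image-only or language-only guidance.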

Related Work

Jonathan Ho, Ajay Jain, and Pieter Abbeel. Denoising Diffusion Probabilistic Models. In NeurIPS, 2020.

Prafulla Dhariwal and Alex Nichol. Diffusion Models Beat GANs on Image Synthesis. In NeurIPS, 2021.

BibTeX

If you find our work useful, please cite our paper:

@inproceedings{liu2023more,

title={More control for free! image synthesis with semantic diffusion guidance},

author={Liu, Xihui and Park, Dong Huk and Azadi, Samaneh and Zhang, Gong and Chopikyan, Arman and Hu, Yuxiao and Shi, Humphrey and Rohrbach, Anna and Darrell, Trevor},

booktitle={Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision},

year={2023}

}